AI Adoption in Continuous Improvement: What’s Holding Us Back?

This summer, we surveyed professionals in Continuous Improvement, Quality, and Operational Excellence to understand how AI is being adopted, the barriers and enablers to integration, and the skills needed to stay relevant in an AI-driven future. Participants included senior leaders, CI managers, data specialists and consultants from a range of sectors. The insights, gained from 42 contributors, provide a grounded view of what’s happening in organisations, rather than assumptions or hype.

In previous articles, we’ve explored how AI is being used and its perceived impact. In this article, we shift focus to the barriers that are preventing wider adoption.

Skills barriers – an issue of readiness rather than resistance

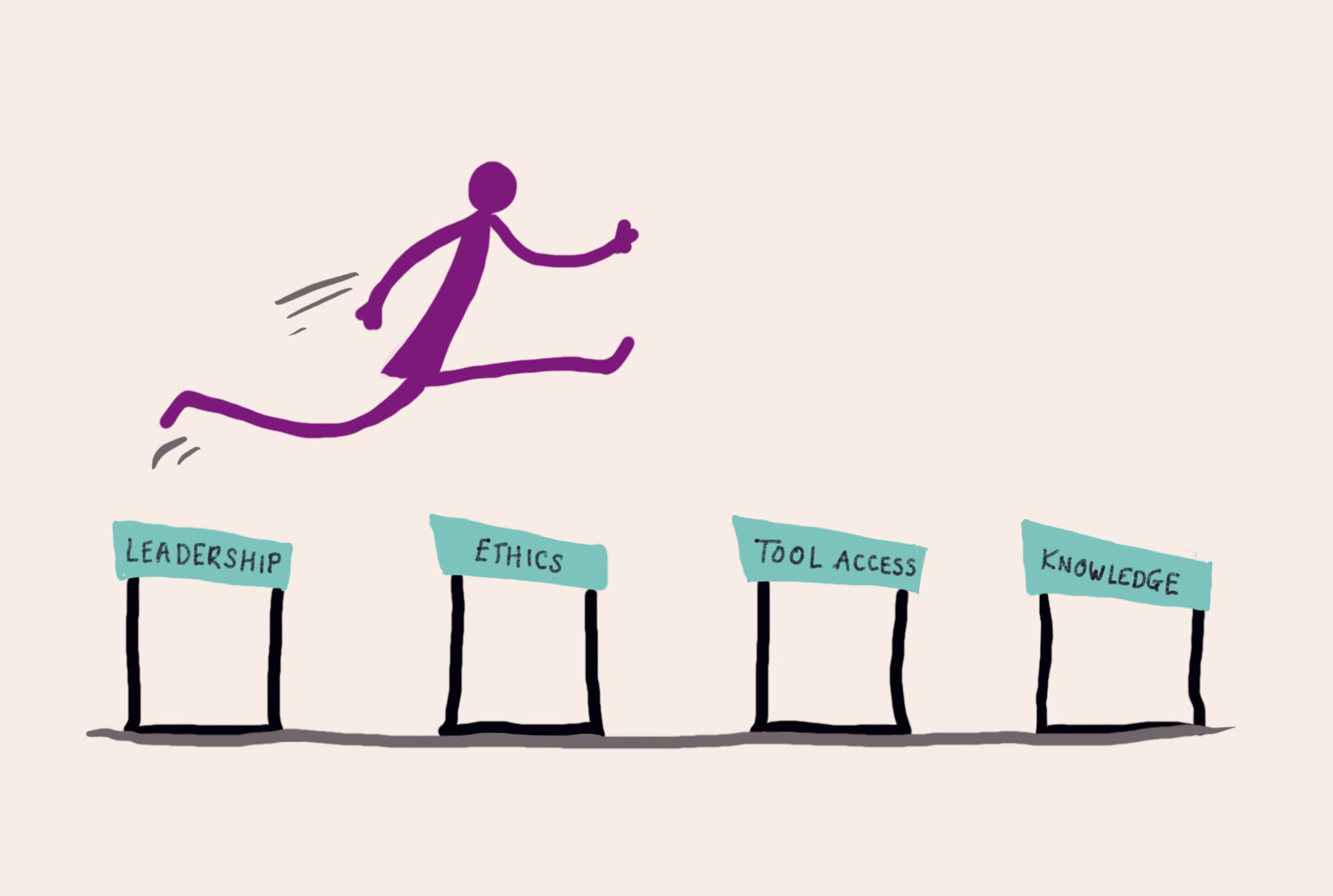

We asked contributors, “To what extent are there barriers to using AI in your work?”, focusing on four elements in particular: lack of knowledge/training; tool access or technical limitations; ethical/compliance concerns; and resistance or lack of leadership. These are among the most common and impactful barriers that organisations face when adopting AI, and they can influence every phase of adoption, from initial planning through to full deployment.

Knowledge and skills emerged as the most significant barrier. 34% of respondents rated lack of knowledge or training as a major or critical impediment, and a further 39% rated it as moderate. In total, 73% say that a lack of knowledge or training is slowing their progress, significantly impeding it, or blocking it altogether. This finding highlights a gap between interest and capability: professionals told us they see AI as beneficial to their work, yet they report gaps in their competence to use it.

This links to a broader pattern we observed in earlier articles: AI adoption is wide but not deep. All but one of the participants have experimented with AI tools, particularly generative AI, but usage tends to be ad hoc rather than strategically embedded. Without a clear roadmap and investment in skills, AI functions more as a tactical enabler than as a driver of transformative change. The result is a classic case of waste, in Lean terms the waste of untapped talent: people want to engage, but they lack the knowledge or structured support to do so.

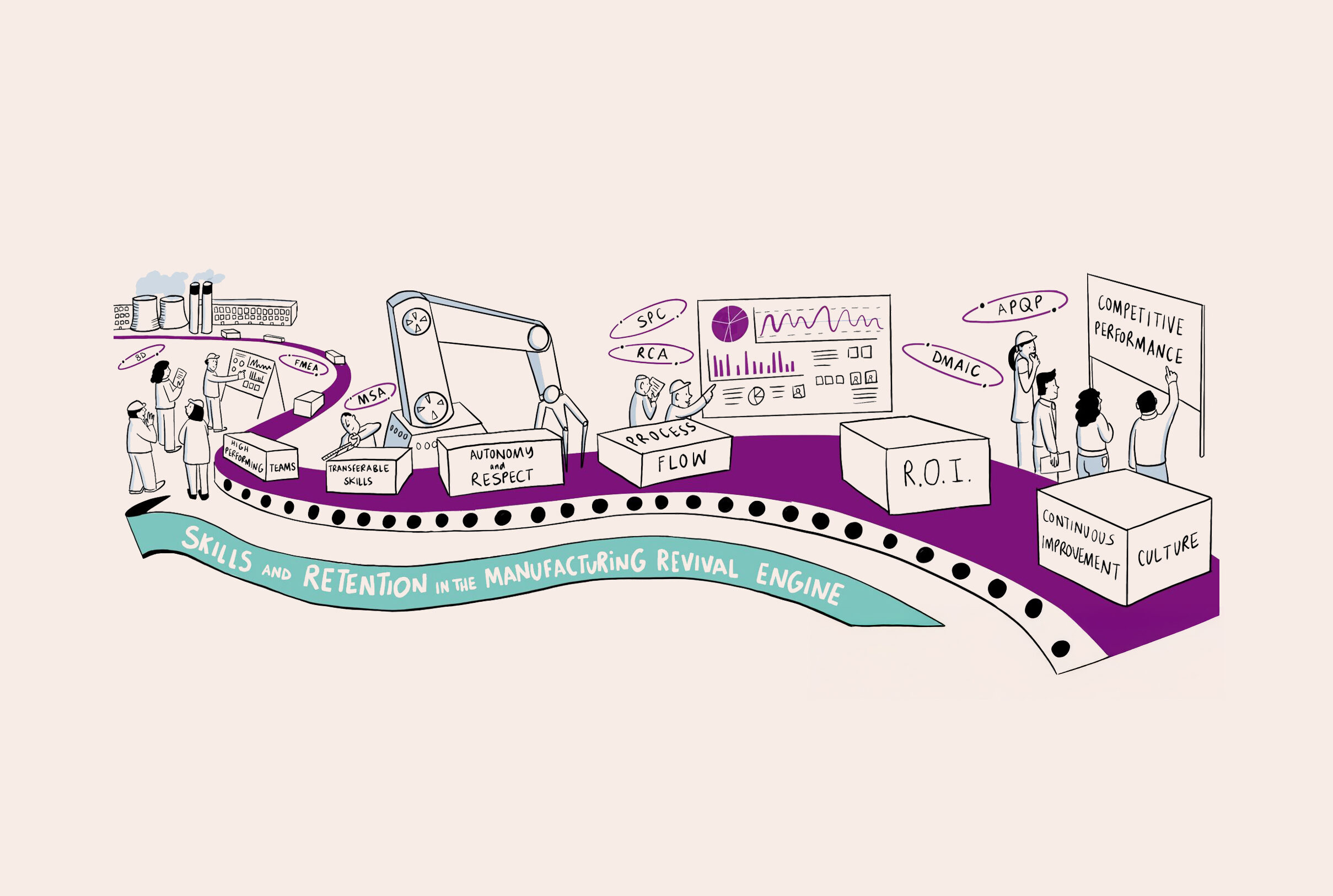

The implications are significant. When professionals lack the skills to integrate AI into problem-solving, process analysis and decision-making, organisations miss opportunities for efficiency and innovation. This is particularly evident in sectors such as manufacturing, where respondents reported enthusiasm for AI but also the highest proportion of critical barriers. In these environments, complex processes and the need for precision amplify the importance of technical fluency and data literacy. Conversely, service-based sectors show slightly higher adoption rates, suggesting that simpler workflows and customer-facing applications may make AI easier to implement, although capability gaps exist there too.

Tool access and technical limitations

Tool access and technical limitations are a barrier for respondents, but mostly at a minor or moderate level. 22% of respondents cited this as a major or critical barrier, and a further 32% cited it as moderate. Taken together, 54% say that tool access is noticeably holding back their progress.

The issues reported include limited access to AI tools, particularly enterprise-grade solutions, and integration challenges when trying to embed AI into existing workflows. Respondents also highlighted data quality concerns that undermine the effectiveness of AI models and create additional work for teams. While only a small proportion rated this as a critical barrier, the cumulative effect of moderate and minor obstacles makes technical limitations a significant source of friction when scaling AI beyond pilot projects.

Ethics – where technology meets culture

Responses on ethical and compliance challenges paint a nuanced picture. 29% of respondents rated them as a major or critical barrier, and 22% rated them as a moderate factor that is slowing their progress. The remaining half perceive either no issue or only a minor inconvenience. This distribution points to a split: for some, ethics is a non-issue, while for others, particularly those in regulated sectors, it is a significant constraint.

The concerns raised go beyond abstract principles. Respondents highlighted data privacy, confidentiality and the “black box” nature of AI models, which makes it difficult to ensure transparency and accountability. There were also worries about bias in AI outputs, the risk of over-reliance on automated decisions, and the potential erosion of human judgement in customer-facing processes. In regulated industries such as finance and healthcare, these issues are amplified by strict compliance requirements, making ethical governance a prerequisite for adoption.

Interestingly, some comments pointed to cultural and behavioural risks: the temptation to take AI shortcuts, the spread of “badge-based” expertise without deep understanding, and the fear that efficiency gains could lead to unrealistic performance expectations. These insights underline that ethical concerns are not just technical; they intersect with organisational culture and professional integrity.

Leadership resistance – a small barrier with big consequences

Of our respondents, 19.5% cited resistance or lack of leadership as a major or critical barrier to using AI in their work, and a further 19.5% rated it as a moderate barrier. While this is not the biggest barrier our respondents reported, it is far from irrelevant.

Leadership may be a smaller barrier than knowledge gaps, but it could be the lever that determines whether AI moves beyond experimentation into strategic integration. Notably, every respondent who sees leadership resistance as a significant barrier also believes AI will become essential within three years. Could it be that the issue is not scepticism about AI’s value, but structural and cultural readiness?

Our research shows that professionals in our field overwhelmingly believe AI will become essential within three years, yet gaps in knowledge, technical access and leadership alignment are slowing progress. Skills are the most significant obstacle: without investment in training and capability-building, AI remains a tactical tool rather than a transformative force. Leadership also plays a pivotal role. Although it is not the most commonly cited barrier, its influence can be decisive: where sponsorship and vision are absent, AI initiatives tend to stall at the pilot stage. Ethical and cultural considerations add further complexity, reminding us that AI governance requires both technical safeguards and cultural alignment.

The data points to a clear priority: organisations should address capability gaps and provide structured support at all levels if they want AI to move from tactical use to transformative impact. Without this, the promise of AI in Continuous Improvement, Quality and Operational Excellence will remain unrealised.

How is your team tackling these challenges? Add your insights and help shape the discussion.